
UI support

- Setting up UI Input

- Multiplayer UIs

- Virtual mouse cursor control

- UI and game input

- UI Toolkit support

You can use the Input System package to control any in-game UI created with the Unity UI package. The integration between the Input System and the UI system is handled by the InputSystemUIInputModule component.

Note

The Input System package does not support IMGUI. If you have OnGUI methods in your player code (Editor code is unaffected), Unity does not receive any input events in those methods when the Active Input Handling Player Setting is set to Input System Package. To restore functionality you can change the setting to Both, but this means that Unity processes the input twice.

Setting up UI input

The InputSystemUIInputModule component acts as a drop-in replacement for the StandaloneInputModule component that the Unity UI package provides. InputSystemUIInputModule offers the same functionality as StandaloneInputModule, but it uses the Input System instead of the legacy Input Manager to drive UI input.

If you have a StandaloneInputModule component on a GameObject, and the Input System is installed, Unity shows a button in the Inspector offering to automatically replace it with an InputSystemUIInputModule for you. The InputSystemUIInputModule is pre-configured to use default Input Actions to drive the UI, but you can override that configuration to suit your needs.

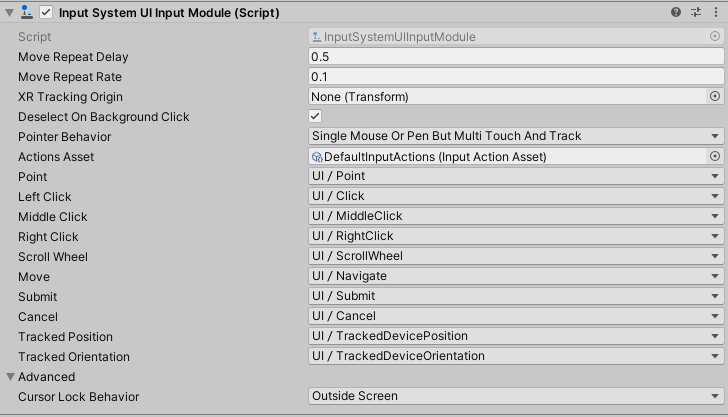

You can use the following properties to configure InputSystemUIInputModule:

| Property | Description |
|---|---|
| Move Repeat Delay | The initial delay (in seconds) between generating an initial IMoveHandler.OnMove navigation event and generating repeated navigation events when the Move Action stays actuated. |
| Move Repeat Rate | The interval (in seconds) between generating repeat navigation events when the Move Action stays actuated. Note that this is capped by the frame rate; there will not be more than one move repeat event each frame so if the frame rate dips below the repeat rate, the effective repeat rate will be lower than this setting. |
| Actions Asset | An Input Action Asset containing all the Actions to control the UI. You can choose which Actions in the Asset correspond to which UI inputs using the following properties. By default, this references a built-in Asset named DefaultInputActions, which contains common default Actions for driving UI. If you want to set up your own Actions, create a custom Input Action Asset and assign it here. When you assign a new Asset reference to this field in the Inspector, the Editor attempts to automatically map Actions to UI inputs based on common naming conventions. |
| Deselect on Background Click | By default, when the pointer is clicked and does not hit any GameObject, the current selection is cleared. This, however, can get in the way of keyboard and gamepad navigation which will want to work off the currently selected object. To prevent automatic deselection, set this property to false. |
| Pointer Behavior | How to deal with multiple pointers feeding input into the UI. See pointer-type input. |
| Cursor Lock Behavior | Controls the origin point of UI raycasts when the cursor is locked. |

You can use the following properties to map Actions from the chosen Actions Asset to UI input Actions. In the Inspector, these appear as foldout lists that contain all the Actions in the Asset:

| Property | Description |
|---|---|
| Point | An Action that delivers a 2D screen position. Use as a cursor for pointing at UI elements to implement mouse-style UI interactions. See pointer-type input. Set to PassThrough Action type and Vector2 value type. |
| Left Click | An Action that maps to the primary cursor button used to interact with UI. See pointer-type input. Set to PassThrough Action type and Button value type. |
| Middle Click | An Action that maps to the middle cursor button used to interact with UI. See pointer-type input. Set to PassThrough Action type and Button value type. |
| Right Click | An Action that maps to the secondary cursor button used to interact with UI. See pointer-type input. Set to PassThrough Action type and Button value type. |
| Scroll Wheel | An Action that delivers gesture input to allow scrolling in the UI. See pointer-type input. Set to PassThrough Action type and Vector2 value type. |
| Move | An Action that delivers a 2D vector used to select the currently active UI selectable. This allows a gamepad or arrow-key style navigation of the UI. See navigation-type input. Set to PassThrough Action type and Vector2 value type. |
| Submit | An Action to engage with or "click" the currently selected UI selectable. See navigation-type input. Set to Button Action type. |
| Cancel | An Action to exit any interaction with the currently selected UI selectable. See navigation-type input. Set to Button Action type. |
| Tracked Device Position | An Action that delivers a 3D position of one or multiple spatial tracking devices, such as XR hand controllers. In combination with Tracked Device Orientation, this allows XR-style UI interactions by pointing at UI selectables in space. See tracked-type input. Set to PassThrough Action type and Vector3 value type. |
| Tracked Device Orientation | An Action that delivers a Quaternion representing the rotation of one or multiple spatial tracking devices, such as XR hand controllers. In combination with Tracked Device Position, this allows XR-style UI interactions by pointing at UI selectables in space. See tracked-type input. Set to PassThrough Action type and Quaternion value type. |
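
The same properties can also be set from a script. The following is a minimal sketch rather than a definitive setup: the class name UIInputModuleSetup, the serialized field customActions, and the action paths such as "UI/Point" are all assumptions about how a custom asset might be laid out, so adjust them to match your own Input Action Asset.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;
using UnityEngine.InputSystem.UI;

// Hypothetical helper (not part of the package): points the UI input module
// at a custom Input Action Asset and maps a few of its actions by name.
[RequireComponent(typeof(InputSystemUIInputModule))]
public class UIInputModuleSetup : MonoBehaviour
{
    // Assumed to be assigned in the Inspector and to contain a "UI" action map.
    [SerializeField] private InputActionAsset customActions;

    private void Awake()
    {
        var module = GetComponent<InputSystemUIInputModule>();

        module.actionsAsset = customActions;
        module.moveRepeatDelay = 0.5f;   // seconds before the first repeat
        module.moveRepeatRate = 0.1f;    // seconds between further repeats
        module.deselectOnBackgroundClick = false;

        // The action names below are assumptions about the custom asset.
        module.point = Ref("UI/Point");
        module.leftClick = Ref("UI/Click");
        module.move = Ref("UI/Navigate");
        module.submit = Ref("UI/Submit");
        module.cancel = Ref("UI/Cancel");
    }

    // Wraps an action from the asset in the reference type the module expects.
    private InputActionReference Ref(string path)
    {
        return InputActionReference.Create(customActions.FindAction(path, throwIfNotFound: true));
    }
}
```

Attach the component to the same GameObject as the InputSystemUIInputModule. Using throwIfNotFound makes a misspelled action path fail loudly instead of silently leaving a UI input unmapped.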

How the bindings work

The UI input module can deal with three different types of input:

- pointer-type input,

- navigation-type input, and

- tracked-type input.

For each of these types of input, input is sourced and combined from a specific set of Actions as detailed below.

Pointer-type input

To the UI, a pointer is a position from which clicks and scrolls can be triggered to interact with UI elements at the pointer's position. Pointer-type input is sourced from point, leftClick, rightClick, middleClick, and scrollWheel.

Note

The UI input module does not have an association between pointers and cursors. In general, the UI is oblivious to whether a cursor exists for a particular pointer. However, for mouse and pen input, the UI input module will respect Cursor.lockState and pin the pointer position at (-1,-1) whenever the cursor is locked. This behavior can be changed through the Cursor Lock Behavior property of the InputSystemUIInputModule.

Multiple pointer Devices may feed input into a single UI input module. Also, in the case of Touchscreen, a single Device can have the ability to have multiple concurrent pointers (each finger contact is one pointer).

Important

Because multiple pointer Devices can feed into the same set of Actions, it is important to set the action type to PassThrough. This ensures that no filtering is applied to input on these actions and that instead every input is relayed as is.

From the perspective of InputSystemUIInputModule, each InputDevice that has one or more controls bound to one of the pointer-type actions is considered a unique pointer. Also, for each Touchscreen device, each separate TouchControl that has one or more of its controls bound to those actions is considered its own unique pointer as well. Each pointer receives a unique pointerId which generally corresponds to the deviceId of the pointer. However, for touch, this will be a combination of deviceId and touchId. Use ExtendedPointerEventData.touchId to find the ID for a touch event.

You can influence how the input module deals with concurrent input from multiple pointers using the Pointer Behavior setting.

| Pointer Behavior | Description |
|---|---|
| Single Mouse or Pen But Multi Touch And Track | Behaves like Single Unified Pointer for all input that is not classified as touch or tracked input, and behaves like All Pointers As Is for tracked and touch input. If concurrent input is received on a Mouse and Pen, for example, the input of both is fed into the same UI pointer instance. The position input of one will overwrite the position of the other. Note that when input is received from touch or tracked devices, the single unified pointer for mice and pens is removed including IPointerExit events being sent in case the mouse/pen cursor is currently hovering over objects. This is the default behavior. |
| Single Unified Pointer | All pointer input is unified such that there is only ever a single pointer. This includes touch and tracked input. This means, for example, that regardless how many devices feed input into Point, only the last such input in a frame will take effect and become the current UI pointer's position. |
| All Pointers As Is | The UI input module will not unify any pointer input. Any device, including touch and tracked devices that feed input pointer-type actions, will be its own pointer (or multiple pointers for touch input). Note: This might mean that there will be an arbitrary number of pointers in the UI, and several objects might be pointed at concurrently. |
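
As a small illustration, the Pointer Behavior setting can also be changed from code through the module's pointerBehavior property. The component name UnifiedPointerSetup is hypothetical, and choosing Single Unified Pointer here is only an example, not a recommendation.

```csharp
using UnityEngine;
using UnityEngine.InputSystem.UI;

// Hypothetical component: forces all pointer input into a single UI pointer.
[RequireComponent(typeof(InputSystemUIInputModule))]
public class UnifiedPointerSetup : MonoBehaviour
{
    private void Awake()
    {
        var module = GetComponent<InputSystemUIInputModule>();

        // Other values: SingleMouseOrPenButMultiTouchAndTrack (the default)
        // and AllPointersAsIs.
        module.pointerBehavior = UIPointerBehavior.SingleUnifiedPointer;
    }
}
```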

Note

If you bind a device to a pointer-type action such as Left Click without also binding it to Point, the UI input module will recognize the device as not being able to point and try to route its input into that of another pointer. For example, if you bind Left Click to the Space key and Point to the position of the mouse, then pressing the space bar will result in a left click at the current position of the mouse.

For pointer-type input (as well as for tracked-type input), InputSystemUIInputModule will send ExtendedPointerEventData instances which are an extended version of the base PointerEventData. These events contain additional data such as the device and pointer type which the event has been generated from.
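
To make the extra event data concrete, here is a minimal sketch of a click handler that inspects it. The class name PointerSourceLogger is hypothetical; the handler assumes it sits on a GameObject that can receive UI pointer events (for example, one with a raycast-target Graphic).

```csharp
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.InputSystem.UI;

// Hypothetical component: logs which device and pointer type produced a click.
public class PointerSourceLogger : MonoBehaviour, IPointerClickHandler
{
    public void OnPointerClick(PointerEventData eventData)
    {
        // Events sent by InputSystemUIInputModule carry the extended data;
        // the type check keeps the handler safe with other input modules.
        if (eventData is ExtendedPointerEventData extended)
        {
            Debug.Log($"Click from {extended.device}, pointer type {extended.pointerType}, " +
                      $"pointerId {extended.pointerId}, touchId {extended.touchId}");
        }
        else
        {
            Debug.Log("Click from an input module that does not send ExtendedPointerEventData.");
        }
    }
}
```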

Navigation-type input

Navigation-type input controls the current selection based on motion read from the move action. Additionally, input from submit will trigger ISubmitHandler on the currently selected object and cancel will trigger ICancelHandler on it.

Unlike with pointer-type input, where multiple pointer inputs may exist concurrently (think two touches or left- and right-hand tracked input), navigation-type input does not have multiple concurrent instances. In other words, only a single move vector and a single submit and cancel input will be processed by the UI module each frame. However, these inputs need not always come from one single Device. Arbitrarily many inputs can be bound to the respective actions.

Important

While move should be set to PassThrough Action type, it is important that submit and cancel be set to the Button Action type.

Navigation input is non-positional, that is, unlike with pointer-type input, there is no screen position associated with these actions. Rather, navigation actions always operate on the current selection.
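
A minimal sketch of how a component might observe navigation-type events follows. The class name NavigationLogger is hypothetical. Because navigation operates on the current selection, the handlers only fire while the GameObject carrying this component is the selected object.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;

// Hypothetical component: logs navigation events delivered to the selected object.
public class NavigationLogger : MonoBehaviour, IMoveHandler, ISubmitHandler, ICancelHandler
{
    // Driven by the move action.
    public void OnMove(AxisEventData eventData)
    {
        Debug.Log($"Move {eventData.moveDir} (vector {eventData.moveVector})");
    }

    // Driven by the submit action.
    public void OnSubmit(BaseEventData eventData)
    {
        Debug.Log("Submit on " + name);
    }

    // Driven by the cancel action.
    public void OnCancel(BaseEventData eventData)
    {
        Debug.Log("Cancel on " + name);
    }
}
```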

Tracked-type input

Input from tracked devices such as XR controllers and HMDs essentially behaves like pointer-type input. The main difference is that the world-space device position and orientation sourced from trackedDevicePosition and trackedDeviceOrientation are translated into a screen-space position via raycasting.

Important

Because multiple tracked Devices can feed into the same set of Actions, it is important to set the action type to PassThrough. This ensures that no filtering is applied to input on these actions and that instead every input is relayed as is.

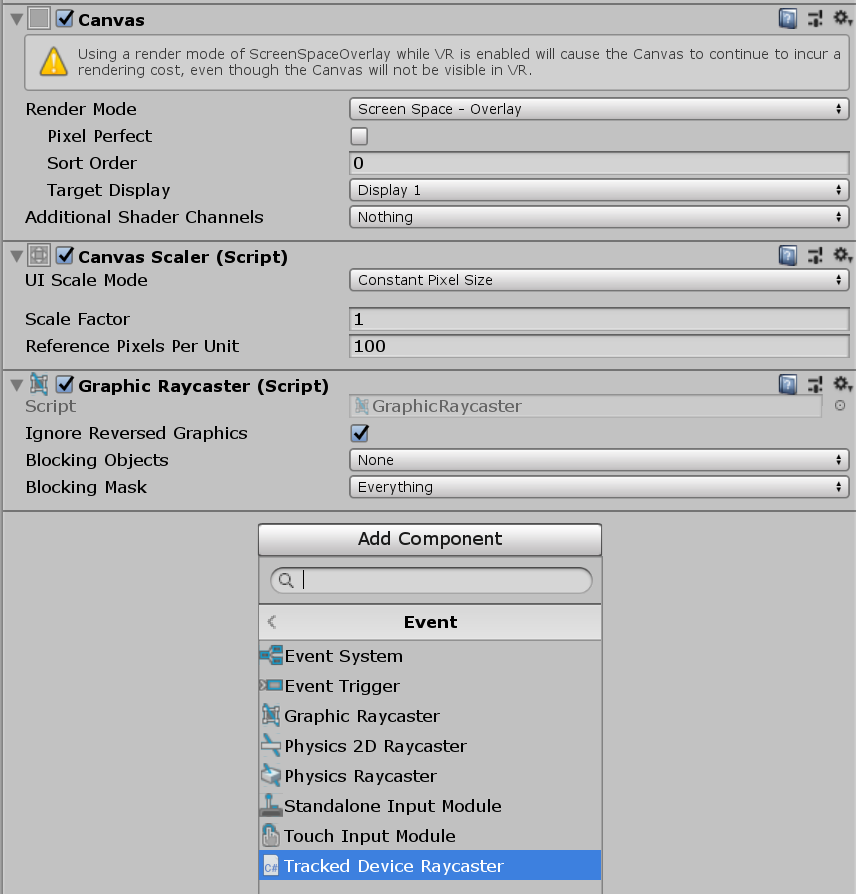

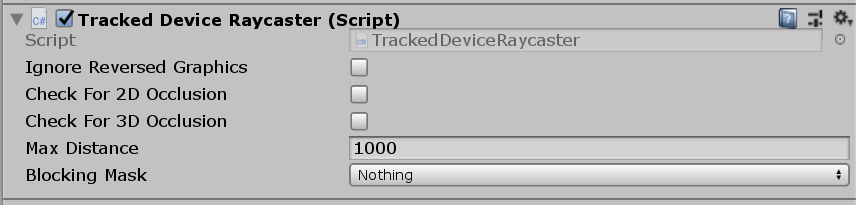

For this raycasting to work, you need to add TrackedDeviceRaycaster to the GameObject that has the UI's Canvas component. This GameObject will usually have a GraphicRaycaster component which, however, only works for 2D screen-space raycasting. You can put TrackedDeviceRaycaster alongside GraphicRaycaster and both can be enabled at the same time without adverse effect.
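
The raycaster is normally added in the Inspector, but it can also be added from a script. This is a sketch under the assumption that the component below (a hypothetical name, EnsureTrackedDeviceRaycaster) is attached to the Canvas GameObject; any existing GraphicRaycaster is left in place.

```csharp
using UnityEngine;
using UnityEngine.InputSystem.UI;

// Hypothetical component: makes sure the Canvas can be hit by tracked devices.
[RequireComponent(typeof(Canvas))]
public class EnsureTrackedDeviceRaycaster : MonoBehaviour
{
    private void Awake()
    {
        // Add the raycaster only if the Canvas does not already have one.
        if (GetComponent<TrackedDeviceRaycaster>() == null)
        {
            gameObject.AddComponent<TrackedDeviceRaycaster>();
        }
    }
}
```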

Clicks on tracked devices do not differ from other pointer-type input. Therefore, actions such as Left Click work for tracked devices just like they work for other pointers.

Multiplayer UIs

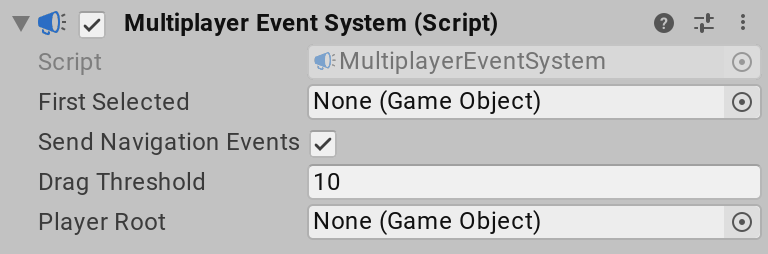

The Input System can also handle multiple separate UI instances on the screen controlled separately from different input Bindings. This is useful if you want to have multiple local players share a single screen with different controllers, so that every player can control their own UI instance. To allow this, you need to replace the EventSystem component from Unity with the Input System's MultiplayerEventSystem component.

Unlike the EventSystem component, you can have multiple MultiplayerEventSystems active in the Scene at the same time. That way, you can have multiple players, each with their own InputSystemUIInputModule and MultiplayerEventSystem components, and each player can have their own set of Actions driving their own UI instance. If you are using the PlayerInput component, you can also set up PlayerInput to automatically configure the player's InputSystemUIInputModule to use the player's Actions. See the documentation on PlayerInput to learn how.

The properties of the MultiplayerEventSystem component are identical to those of the Event System. Additionally, the MultiplayerEventSystem component adds a playerRoot property, which you can set to a GameObject that contains all the UI selectables this event system should handle in its hierarchy. Mouse input that this event system processes then ignores any UI selectables which are not on any GameObject in the Hierarchy under Player Root.

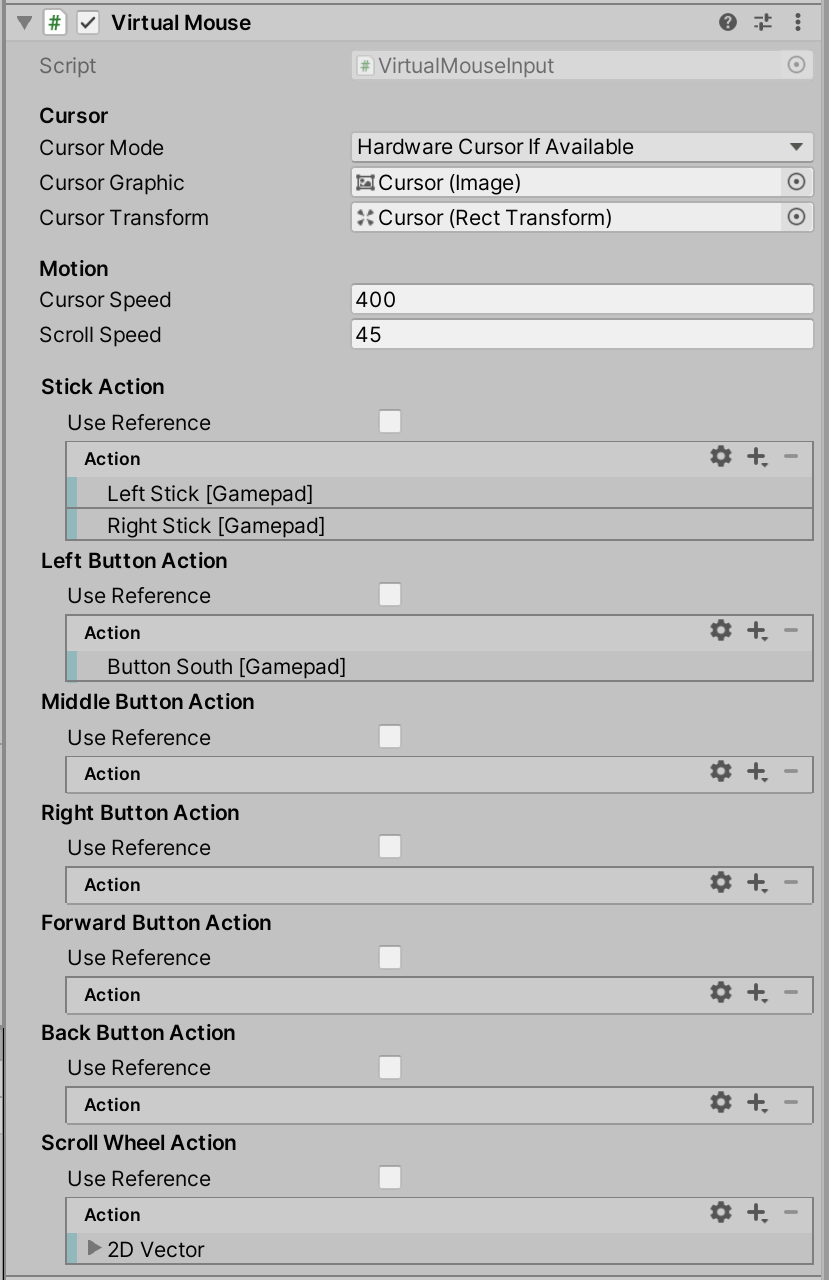
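
As a rough sketch, the per-player setup can also be wired from code. The class name PlayerUIBinder and its three serialized fields are hypothetical; the example assumes each player has their own MultiplayerEventSystem and their own UI hierarchy.

```csharp
using UnityEngine;
using UnityEngine.InputSystem.UI;

// Hypothetical component: restricts one player's event system to that player's UI.
public class PlayerUIBinder : MonoBehaviour
{
    [SerializeField] private MultiplayerEventSystem eventSystem; // this player's event system
    [SerializeField] private GameObject playerUIRoot;            // root of this player's UI
    [SerializeField] private GameObject firstSelected;           // initial selection, under playerUIRoot

    private void Start()
    {
        // Only selectables under this root are handled by this event system.
        eventSystem.playerRoot = playerUIRoot;

        // Give navigation input a starting point inside the player's own UI.
        eventSystem.SetSelectedGameObject(firstSelected);
    }
}
```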

Virtual mouse cursor control

Note

While pointer input generated from a VirtualMouseInput component is received in UI Toolkit, the VirtualMouseInput component is not officially supported for use with UI Toolkit. At the moment, it only works in combination with the Unity UI system.

If your application uses gamepads and joysticks as an input, you can use the navigation Actions to operate the UI. However, it usually involves extra work to make the UI work well with navigation. An alternative way to operate the UI is to allow gamepads and joysticks to drive the cursor from a "virtual mouse cursor".

Tip

To see an example of a VirtualMouseInput setup, see the Gamepad Mouse Cursor sample included with the Input System package.

To set this up, follow these steps:

- Create a UI GameObject with an Image component. This represents a software mouse cursor. Then, add it as a child of the Canvas that the cursor should operate on. Set the anchor position of the GameObject's RectTransform to the bottom left. Make it the last child of the Canvas so that the cursor draws on top of everything else.

- Add a VirtualMouseInput component to the GameObject. Then, link the Image component to the Cursor Graphic property, and the RectTransform of the cursor GameObject to the Cursor Transform property.

- If you want the virtual mouse to control the system mouse cursor, set Cursor Mode to Hardware Cursor If Available. In this mode, the Cursor Graphic is hidden when a system Mouse is present and you use Mouse.WarpCursorPosition to move the system mouse cursor instead of the software cursor. The transform linked through Cursor Transform is not updated in that case.

- To configure the input to drive the virtual mouse, either add bindings on the various actions (such as Stick Action), or enable Use Reference and link existing actions from an .inputactions asset.

Important

Make sure that the InputSystemUIInputModule on the UI's EventSystem does not receive navigation input from the same devices that feed into VirtualMouseInput. If, for example, VirtualMouseInput is set up to receive input from gamepads, and Move, Submit, and Cancel on InputSystemUIInputModule are also linked to the gamepad, then the UI receives input from the gamepad on two channels.

At runtime, the component adds a virtual Mouse device which the InputSystemUIInputModule component picks up. The controls of the Mouse are fed input based on the actions configured on the VirtualMouseInput component.

Note that the resulting Mouse input is visible in all code that picks up input from the mouse device. You can therefore use the component for mouse simulation elsewhere, not just with InputSystemUIInputModule.

Note

Do not set up gamepads and joysticks for navigation input while using VirtualMouseInput. If both VirtualMouseInput and navigation are configured, input is triggered twice: once via the pointer input path, and once via the navigation input path. If you encounter problems such as buttons being pressed twice, this is likely the cause.

UI and game input

Note

A sample called UI vs Game Input is provided with the package and can be installed from the Unity Package Manager UI in the editor. The sample demonstrates how to deal with a situation where ambiguities arise between inputs for UI and inputs for the game.

UI in Unity consumes input through the same mechanisms as game/player code. Right now, there is no mechanism that implicitly ensures that if a certain input – such as a click – is consumed by the UI, it is not also "consumed" by the game. This can create ambiguities between, for example, code that responds to UI.Button.onClick and code that responds to InputAction.performed of an Action bound to <Mouse>/leftButton.

Whether such ambiguities exist depends on how UIs are used. In the following scenarios, ambiguities are avoided:

- All interaction is performed through UI elements. A 2D/3D scene is rendered in the background but all interaction is performed through UI events (including those such as 'background' clicks on the Canvas).

- UI is overlaid over a 2D/3D scene but the UI elements cannot be interacted with directly.

- UI is overlaid over a 2D/3D scene but there is a clear "mode" switch that determines if interaction is picked up by UI or by the game. For example, a first-person game on desktop may employ a cursor lock and direct input to the game while it is engaged whereas it may leave all interaction to the UI while the lock is not engaged.

When ambiguities arise, they do so differently for pointer-type and navigation-type input.

Handling ambiguities for pointer-type input

Note

Calling EventSystem.IsPointerOverGameObject from within InputAction callbacks such as InputAction.performed will lead to a warning. The UI updates separately after input processing and UI state thus corresponds to that of the last frame/update while input is being processed.

Input from pointers (mice, touchscreens, pens) can be ambiguous depending on whether or not the pointer is over a UI element when initiating an interaction. For example, if there is a button on screen, then clicking on the button may lead to a different outcome than clicking outside of the button and within the game scene.

If all pointer input is handled via UI events, no ambiguities arise as the UI will implicitly route input to the respective receiver. If, however, input within the UI is handled via UI events and input in the game is handled via Actions, pointer input will by default lead to both being triggered.

The easiest way to resolve such ambiguities is to respond to in-game actions by polling from inside MonoBehaviour.Update methods and using EventSystem.IsPointerOverGameObject to find out whether the pointer is over UI or not. Another way is to use EventSystem.RaycastAll to determine if the pointer is currently over UI.
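
The polling approach might look like the following sketch. The class name FireWhenNotOverUI, the serialized fireAction reference, and the Fire method are all hypothetical stand-ins for your own in-game action and response.

```csharp
using UnityEngine;
using UnityEngine.EventSystems;
using UnityEngine.InputSystem;

// Hypothetical component: reacts to an in-game action only when the pointer
// is not over UI. Polls in Update instead of using InputAction callbacks.
public class FireWhenNotOverUI : MonoBehaviour
{
    // Assumed to reference an in-game action, e.g. one bound to <Mouse>/leftButton.
    [SerializeField] private InputActionReference fireAction;

    private void OnEnable()
    {
        fireAction.action.Enable();
    }

    private void OnDisable()
    {
        fireAction.action.Disable();
    }

    private void Update()
    {
        if (!fireAction.action.WasPressedThisFrame())
            return;

        // Skip the in-game response when the pointer is over a UI element.
        if (EventSystem.current != null && EventSystem.current.IsPointerOverGameObject())
            return;

        Fire();
    }

    // Placeholder for the actual in-game response.
    private void Fire()
    {
        Debug.Log("Fire!");
    }
}
```

Polling from Update keeps the IsPointerOverGameObject call outside of InputAction callbacks, which avoids the warning described in the note above.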

Handling ambiguities for navigation-type input

Ambiguities for navigation-type Devices such as gamepads and joysticks (but also keyboards) cannot arise the same way that they do for pointers. Instead, your application has to decide explicitly whether to use input for the UI's Move, Submit, and Cancel inputs or for the game. This can be done by either splitting control on a Device or by having an explicit mode switch.

Splitting input on a Device is done by simply using certain controls for operating the UI while using others to operate the game. For example, you could use the d-pad on gamepads to operate UI selection while using the sticks for in-game character control. This setup requires adjusting the bindings used by the UI Actions accordingly.

An explicit mode switch is implemented by temporarily switching to UI control while suspending in-game Actions. For example, the left trigger on the gamepad could bring up an item selection wheel which then puts the game in a mode where the sticks are controlling UI selection, the A button confirms the selection, and the B button closes the item selection wheel. No ambiguities arise as in-game actions will not respond while the UI is in the "foreground".
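
One way to sketch such a mode switch is to disable the in-game action map while the UI is open. Everything named here is an assumption for illustration: the class InputModeSwitcher, the map name "Gameplay", and the selectionWheel GameObject. The UI actions themselves are left to the InputSystemUIInputModule.

```csharp
using UnityEngine;
using UnityEngine.InputSystem;

// Hypothetical component: suspends in-game actions while a UI panel is open.
public class InputModeSwitcher : MonoBehaviour
{
    [SerializeField] private InputActionAsset actions;   // asset with a "Gameplay" map (assumed name)
    [SerializeField] private GameObject selectionWheel;  // UI shown while in UI mode

    private InputActionMap gameplay;

    private void Awake()
    {
        gameplay = actions.FindActionMap("Gameplay", throwIfNotFound: true);
        gameplay.Enable();
        selectionWheel.SetActive(false);
    }

    // Call this, for example, when the left trigger is pressed.
    public void OpenWheel()
    {
        gameplay.Disable();              // in-game actions stop responding
        selectionWheel.SetActive(true);  // UI navigation takes over
    }

    // Call this, for example, from the UI's cancel or confirm handling.
    public void CloseWheel()
    {
        selectionWheel.SetActive(false);
        gameplay.Enable();
    }
}
```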

UI Toolkit support

As of Unity 2021.2, UI Toolkit is supported as an alternative to the Unity UI system for implementing UIs in players.

Input support for both Unity UI and UI Toolkit is based on the same EventSystem and BaseInputModule subsystem. In other words, the same input setup based on InputSystemUIInputModule supports input in either UI solution and nothing extra needs to be done.

Internally, UI Toolkit installs an event listener in the form of the PanelEventHandler component which intercepts events that InputSystemUIInputModule sends and translates them into UI Toolkit-specific events that are then routed into the visual tree. If you employ EventSystem.SetUITookitEventSystemOverride, this default mechanism is bypassed.

Note

XR (tracked-type input) is not yet supported in combination with UI Toolkit. This means that you cannot use devices such as VR controllers to operate interfaces created with UI Toolkit.

There are some additional things worth noting:

- UI Toolkit handles raycasting internally. No separate raycaster component is needed like for uGUI. This means that TrackedDeviceRaycaster does not work together with UI Toolkit.

- A pointer click and a gamepad submit action are distinct at the event level in UI Toolkit. This means that if you, for example, register a callback with button.RegisterCallback<ClickEvent>(_ => ButtonWasClicked()); the handler is not invoked when the button is "clicked" with the gamepad (which sends a NavigationSubmitEvent and not a ClickEvent). If, however, you subscribe with button.clicked += () => ButtonWasClicked(); the handler is invoked in both cases.